This piece was written in response to The Modernist Society's callout for articles with the theme factory. It's published in Issue #17 - Factory, which is out now. If you like it please consider picking up a copy! Thanks to the team at The Modernist for all their support.

What is a factory?

Most obviously, a factory is a place: a building where products are assembled on a production line. As opposed to the cottage industries that came before, a factory is a space specifically designed for efficient production: one where every stage of making something can be measured and refined indefinitely, to turn out perfect products for general consumers.

You could also say that a factory is an idea: a way of managing a job by breaking it into smaller pieces. These smaller parts can then be standardised. We don’t expect to mill our own flour before making a cake, for example: we buy a product off the shelf that will be of good quality, something we don’t have to think about any more. Standardised flour means we are free to innovate on another level: experimenting with recipes, flavours and ingredients.

The metaphor goes further. Almost everything in the modern world is made of standardised parts. A simple mains plug contains parts from all over the world. If we buy a new computer mouse we can be sure that the USB cable will fit; if we fit a new kitchen, we can be sure that our existing screwdrivers will work.

Perhaps nowhere is the metaphor of the factory more evident than in modern computer design, both hardware and software.

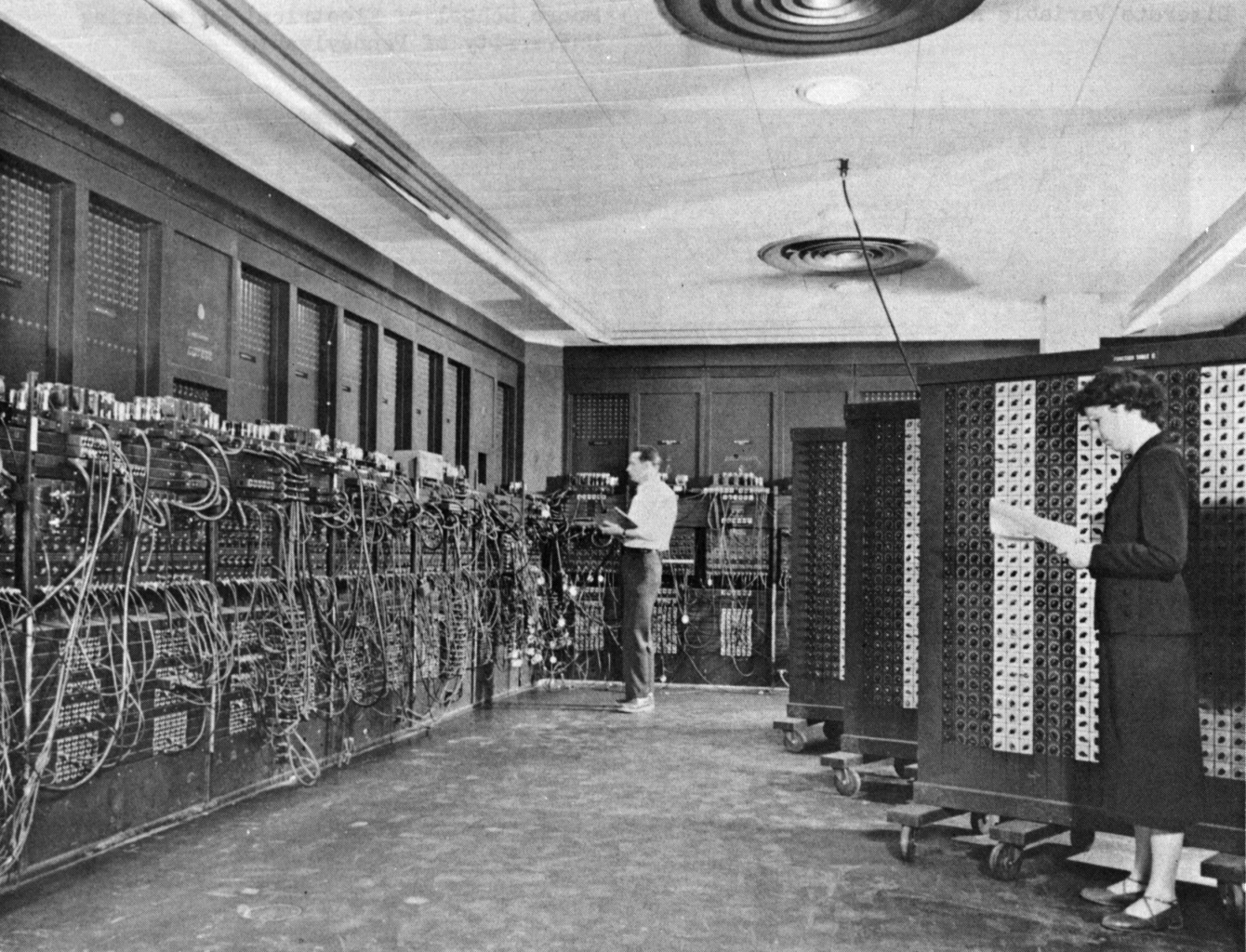

Computers at their outset were more of a cottage industry. They would be made for one specific purpose, designed from the circuit board and valves upwards to run one specific program. Eventually came machines like ENIAC, built by John Mauchly and J. Presper Eckert and usually described as the first “Turing complete”, or general purpose, electronic computer: one that could be programmed to do many tasks. Still though, it had to be programmed in its own custom way, and needed its own special parts.

IBM’s OS/360 was one of the first general purpose operating systems: it allowed standard programming across their entire System/360 product range, and probably led to their commercial success. Still though, it could only be run on specific IBM computers. Standardised hardware happened almost by accident. The 1981 IBM Personal Computer was so successful that it was completely reverse-engineered by rival companies such as Compaq. This then became the de facto standard: the “IBM Compatible” we simply call a “PC” today.

This standardised platform then meant that it was possible to write one operating system that would work reliably across a huge number of computers.

This is probably the first time we can claim computers were truly modernist. It is here, with both standardised operating systems and standardised hardware interfaces, that the business of iterative, research-based design can begin. Linus’s law states that “given enough eyeballs, all bugs are shallow” -- and the eyeballs are not possible without everyone singing from the same hymn sheet.

From these standardised operating systems come standardised software libraries. Much like our cake metaphor, we no longer have to write the code that plays an audio file, lets us browse the filesystem, or simply copies a file: we can download a library that does it for us, letting us get on with what we wanted to do in the first place. And we can be fairly certain that the software for all these vital, day-to-day tasks is an almost perfect piece of design, constantly updated by some of the best coders in the world, and used billions of times a day without us even noticing it is there. And what is modernist design if not design we don’t notice? What is more functional than a machine we are not even aware exists?
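To make the cake metaphor concrete, here is a minimal sketch in Python of what "off the shelf" looks like in practice. It assumes nothing beyond Python's standard library; the function name `copy_with_library` and the file names are made up purely for illustration.

```python
import shutil
import tempfile
from pathlib import Path


def copy_with_library(source: Path, destination: Path) -> Path:
    """Copy a file by handing the whole job to the standard library."""
    # shutil.copyfile does the opening, reading, buffering and writing,
    # so none of that has to be written (or even thought about) here.
    shutil.copyfile(source, destination)
    return destination


if __name__ == "__main__":
    # Work in a throwaway folder so the example leaves nothing behind.
    with tempfile.TemporaryDirectory() as folder:
        original = Path(folder) / "recipe.txt"
        original.write_text("flour, eggs, sugar, butter\n")
        duplicate = copy_with_library(original, Path(folder) / "recipe-copy.txt")
        print(duplicate.read_text(), end="")
```

The interesting part is how little there is to see: one call to a standard component, and the rest of the program is free to be about the recipe rather than the flour.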

The industrial, factory nature of the computer follows through to software design itself. Bret Victor, in his classic Magic Ink paper, tells us:

“Although software is the archetypical non-physical product, modern software interfaces have evolved overtly mechanical metaphors. Buttons are pushed, sliders are slid, windows are dragged, icons are dropped, panels extend and retract. People are encouraged to consider software a machine—when a button is pressed, invisible gears grind and whir, and some internal or external state is changed. Manipulation of machines is the domain of industrial design.”

It’s no real surprise this is the case. We still think of computers as machines, although perhaps we do not think that way about more modern, human devices like tablets and smartphones. Web services like Facebook are everywhere, installed without asking on new phones: and yet Facebook and Twitter too are built using these layers upon layers, standardised libraries using standardised computer controls, iterated constantly, each part under intense scrutiny by teams of engineers.

Like all hyper-designed systems though, perhaps this level of specialism and nested layers has led to the current disconnect people feel with computers. It’s now almost impossible to understand how a computer works from end to end, even if you wanted to: computers are designed by computers nowadays. We are hugely divorced from any level of dealing with our machines directly. Would the walled gardens of services and hardware like Apple’s App Store on the iPad have been possible two decades ago, or would they have been rejected as silly toys for their needless lock-in to a single vendor?

As Cory Doctorow points out, nobody has ever invented a “Turing Machine minus one” -- a computer that can do all but one job. So we are now in a world where companies are able to stop people doing things using vendor lock-in, but as a public we are divorced enough from what we are actually doing to not really care, and see our computers not as machines but as specialised devices with tasks pre-determined by the vendor. Perhaps Victor’s comments are already on the way out.

I’m sure the rise and rise of the Raspberry Pi has something to do with this. As a long-time hacker, I find the Pi’s success curious: most of the things it does can be done on a regular desktop computer, booting a Linux OS like Ubuntu off a USB stick. People already have everything they need to mess around. And yet now computers feel like black boxes: things we are scared of poking around inside, in case they break: and when they do break, we’re suddenly reliant on either the vendor or a geeky friend to fix them for us. But who could be scared of breaking a £20 computer that has the status of a “project”?

Computers and modern software libraries are exemplars of modernist design, both for good and bad. At once they are hopelessly well engineered examples of a factory mentality that has enabled unbridled progress, but as their complexity grows, they also become increasingly arcane and esoteric. While industrial manufacturing is on the decline in the UK, I feel the mentality of the factory -- ever increasing, component-based standardised construction -- is more popular than ever.

Thanks to Justin Hellings for some invaluable advice and technical feedback on this article.